Read Input Until There Are No More Lines

This is the third part of the Bash One-Liners Explained article series. In this part I'll teach you all about input/output redirection. I'll use only the best bash practices, various bash idioms and tricks. I want to illustrate how to get various tasks done with just bash built-in commands and bash programming language constructs.

See the first part of the series for introduction. After I'm done with the series I'll release an ebook (similar to my ebooks on awk, sed, and perl), and also bash1line.txt (similar to my perl1line.txt).

Also see my other articles about working fast in bash from 2007 and 2008:

- Working Productively in Bash's Emacs Command Line Editing Mode (comes with a cheat sheet)

- Working Productively in Bash's Vi Command Line Editing Mode (comes with a cheat sheet)

- The Definitive Guide to Bash Command Line History (comes with a cheat sheet)

Let's start.

Part III: Redirections

Working with redirections in bash is really easy once you realize that it's all about manipulating file descriptors. When bash starts it opens the three standard file descriptors: stdin (file descriptor 0), stdout (file descriptor 1), and stderr (file descriptor 2). You can open more file descriptors (such as 3, 4, 5, ...), and you can close them. You can also copy file descriptors. And you can write to them and read from them.

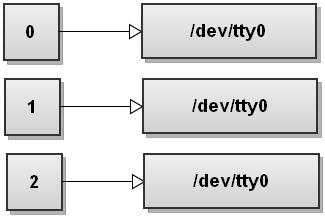

File descriptors always point to some file (unless they're closed). Usually when bash starts all three file descriptors, stdin, stdout, and stderr, point to your terminal. The input is read from what you type in the terminal and both outputs are sent to the terminal.

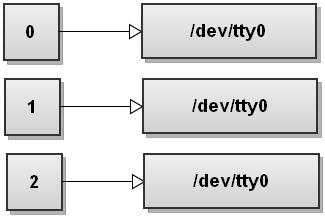

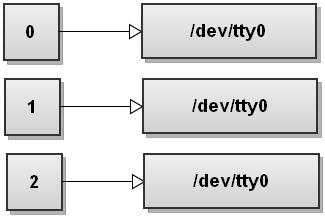

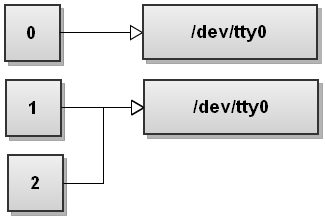

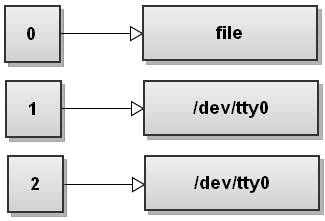

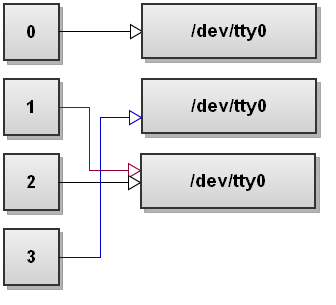

Assuming your terminal is /dev/tty0, here is how the file descriptor table looks when bash starts:

When bash runs a command it forks a child process (see man 2 fork) that inherits all the file descriptors from the parent process, then it sets up the redirections that you specified, and execs the command (see man 3 exec).

To be a pro at bash redirections all you need to do is visualize how the file descriptors get changed when redirections happen. The graphics illustrations will help you.

1. Redirect the standard output of a command to a file

$ command >file

Operator > is the output redirection operator. Bash first tries to open the file for writing and if it succeeds it sends the stdout of command to the newly opened file. If it fails opening the file, the whole command fails.

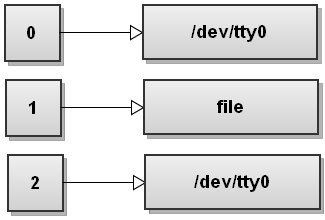

Writing command >file is the same as writing command 1>file. The number 1 stands for stdout, which is the file descriptor number for standard output.

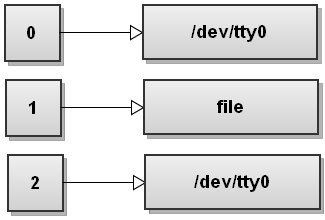

Here is how the file descriptor table changes. Bash opens file and replaces file descriptor 1 with the file descriptor that points to file. So all the output that gets written to file descriptor 1 from now on ends up being written to file:

In general you can write command n>file, which will redirect the file descriptor n to file.

For instance,

$ ls > file_list

Redirects the output of the ls command to the file_list file.

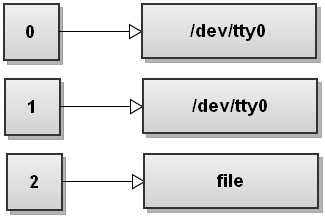

2. Redirect the standard error of a command to a file

$ command 2> file

Here bash redirects the stderr to file. The number 2 stands for stderr.

Here is how the file descriptor table changes:

Bash opens file for writing, gets the file descriptor for this file, and it replaces file descriptor 2 with the file descriptor of this file. So now anything written to stderr gets written to file.
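A minimal sketch of this redirect in bash. The `noisy` function and the temporary directory are assumptions made up for the demo; they are not part of the one-liner itself. The function prints one line to each stream so you can see which one ends up in the file.

```shell
# Hypothetical helper for the demo: one line to stdout, one to stderr.
noisy() {
    echo "to stdout"
    echo "to stderr" >&2
}

tmpdir=$(mktemp -d)                          # scratch directory for the demo

# stderr (fd 2) goes to the file; stdout is untouched and gets captured.
captured_out=$(noisy 2>"$tmpdir/err.log")
captured_err=$(cat "$tmpdir/err.log")

echo "stdout was: $captured_out"
echo "stderr was: $captured_err"
```

Only the stderr line lands in err.log, because only file descriptor 2 was replaced.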

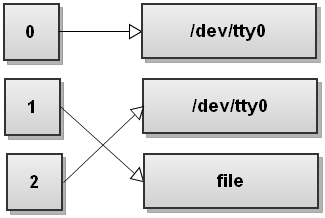

3. Redirect both standard output and standard error to a file

$ command &>file

This one-liner uses the &> operator to redirect both output streams - stdout and stderr - from command to file. This is bash's shortcut for quickly redirecting both streams to the same destination.

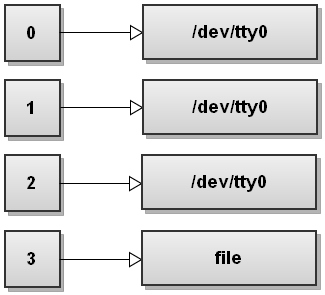

Here is how the file descriptor table looks after bash has redirected both streams:

As you can see both stdout and stderr now point to file. So anything written to stdout and stderr gets written to file.

There are several ways to redirect both streams to the same destination. You can redirect each stream one after another:

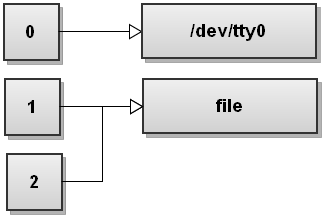

$ command >file 2>&1

This is a much more common way to redirect both streams to a file. First stdout is redirected to file, and then stderr is duplicated to be the same as stdout. So both streams end up pointing to file.

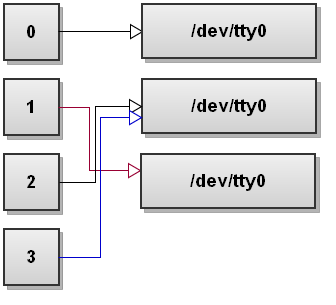

When bash sees several redirections it processes them from left to right. Let's go through the steps and see how that happens. Before running any commands bash's file descriptor table looks like this:

Now bash processes the first redirection >file. We've seen this before and it makes stdout point to file:

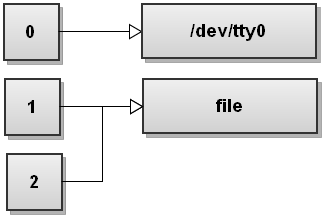

Next bash sees the second redirection 2>&1. We haven't seen this redirection before. This one duplicates file descriptor 2 to be a copy of file descriptor 1 and we get:

Both streams have been redirected to file.

However be careful here! Writing:

$ command >file 2>&1

Is not the same as writing:

$ command 2>&1 >file

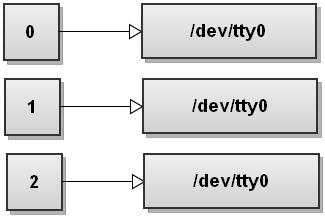

The order of redirects matters in bash! This command redirects only the standard output to the file. The stderr will still print to the terminal. To understand why that happens, let's go through the steps again. So before running the command the file descriptor table looks like this:

Now bash processes redirections left to right. It first sees 2>&1 so it duplicates stderr to stdout. The file descriptor table becomes:

Now bash sees the second redirect >file and it redirects stdout to file:

Do you see what happens here? Stdout now points to file but the stderr still points to the terminal! Everything that gets written to stderr still gets printed out to the screen! So be very, very careful with the order of redirects!
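The difference between the two orders can be checked with a small experiment. This is only a sketch: the `noisy` helper and the file names are made up for the demo, and command substitution stands in for the terminal so that whatever escapes the file redirect can be captured in a variable.

```shell
# Hypothetical helper for the demo: one line to stdout, one to stderr.
noisy() {
    echo "to stdout"
    echo "to stderr" >&2
}

tmpdir=$(mktemp -d)

# Order 1: stdout -> file first, then stderr becomes a copy of stdout (the file).
noisy >"$tmpdir/both.log" 2>&1

# Order 2: stderr becomes a copy of stdout as it is at that moment
# (here: the command substitution pipe), and only then stdout -> file.
leaked=$(noisy 2>&1 >"$tmpdir/only_out.log")

both=$(cat "$tmpdir/both.log")
only_out=$(cat "$tmpdir/only_out.log")

echo "both.log:     $both"
echo "only_out.log: $only_out"
echo "escaped:      $leaked"
```

With the first order both lines are in the file. With the second order the stderr line escapes to wherever stdout pointed before the file redirect was applied.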

Also note that in bash, writing this:

$ command &>file

Is exactly the same as:

$ command >&file

The first form is preferred however.

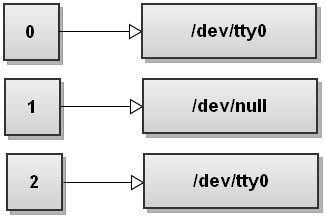

4. Discard the standard output of a command

$ command > /dev/null

The special file /dev/null discards all data written to it. So what we're doing here is redirecting stdout to this special file and it gets discarded. Here is how it looks from the file descriptor table's perspective:

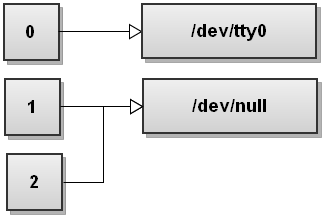

Similarly, by combining the previous one-liners, we can discard both stdout and stderr by doing:

$ command >/dev/null 2>&1

Or just simply:

$ command &>/dev/null

File descriptor table for this feat looks like this:

5. Redirect the contents of a file to the stdin of a command

$ command <file

Here bash tries to open the file for reading before running any commands. If opening the file fails, bash quits with error and doesn't run the command. If opening the file succeeds, bash uses the file descriptor of the opened file as the stdin file descriptor for the command.

After doing that the file descriptor table looks like this:

Here is an example. Suppose you want to read the first line of the file in a variable. You can simply do this:

$ read -r line < file

Bash's built-in read command reads a single line from standard input. By using the input redirection operator < we set it up to read the line from the file.
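The same redirection can feed a whole loop, which is the usual way to read input until there are no more lines. The following is a sketch under assumptions: the file name and its three lines are invented for the demo.

```shell
tmpdir=$(mktemp -d)
printf 'alpha\nbeta\ngamma\n' >"$tmpdir/input.txt"   # sample input for the demo

# A single read consumes exactly one line.
read -r first <"$tmpdir/input.txt"

# The redirect on "done" applies to the whole loop, so every read continues
# where the previous one stopped. read returns non-zero at end of file,
# which ends the loop.
count=0
last=""
while IFS= read -r line; do
    count=$((count + 1))
    last=$line
done <"$tmpdir/input.txt"

echo "first=$first count=$count last=$last"
```

`IFS=` keeps leading and trailing whitespace in each line, and `-r` stops read from treating backslashes as escapes.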

6. Redirect a bunch of text to the stdin of a command

$ command <<EOL
your
multi-line
text
goes
here
EOL

Here we use the here-document redirection operator <<MARKER. This operator instructs bash to read the input from stdin until a line containing only MARKER is found. At this point bash passes all the input read so far to the stdin of the command.

Here is a common example. Suppose you've copied a bunch of URLs to the clipboard and you want to remove the http:// part of them. A quick one-liner to do this would be:

$ sed 's|http://||' <<EOL
http://url1.com
http://url2.com
http://url3.com
EOL

Here the input of a list of URLs is redirected to the sed command that strips http:// from the input.

This example produces this output:

url1.com
url2.com
url3.com
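One detail worth knowing when you use here-documents in scripts: bash expands variables inside the text unless the marker is quoted. The sketch below is not one of the one-liners above; the variable name and file names are made up to show the difference.

```shell
tmpdir=$(mktemp -d)
name="world"

# Unquoted marker: $name is expanded before the text reaches cat.
cat >"$tmpdir/expanded.txt" <<EOL
hello $name
EOL

# Quoted marker: the text is passed through literally.
cat >"$tmpdir/literal.txt" <<'EOL'
hello $name
EOL

expanded=$(cat "$tmpdir/expanded.txt")
literal=$(cat "$tmpdir/literal.txt")

echo "$expanded"
echo "$literal"
```

Quote the marker when the text contains dollar signs or backticks that should reach the command unchanged.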

7. Redirect a single line of text to the stdin of a command

$ command <<< "foo bar baz"

For example, let's say you quickly want to pass the text in your clipboard as the stdin to a command. Instead of doing something like:

$ echo "clipboard contents" | command

You can now just write:

$ command <<< "clipboard contents"


This trick changed my life when I learned it!
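A here-string is also handy with built-ins such as read, because no pipe and no sub-shell are involved and the variables stay in the current shell. A small sketch with made-up variable names:

```shell
# Split a string into words without a pipe; read runs in the current shell,
# so the variables are still set afterwards.
read -r first second third <<< "foo bar baz"

# Any command that reads stdin works the same way.
upper=$(tr 'a-z' 'A-Z' <<< "clipboard contents")

echo "$first / $second / $third"
echo "$upper"
```

With `echo "foo bar baz" | read -r first second third` the read would run in a sub-shell in bash's default configuration and the variables would be lost once the pipeline ends.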

8. Redirect stderr of all commands to a file forever

$ exec 2>file
$ command1
$ command2
$ ...

This one-liner uses the built-in exec bash command. If you specify redirects after it, then they will last forever, meaning until you change them or exit the script/shell.

In this case the 2>file redirect is set up that redirects the stderr of the current shell to the file. Running commands after setting up this redirect will have the stderr of all of them redirected to file. It's really useful in situations when you want to have a complete log of all errors that happened in the script, but you don't want to specify 2>file after every single command!

In general exec can take an optional argument of a command. If it's specified, bash replaces itself with the command. So what you get is only that command running, and there is no more shell.
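To try the persistent stderr redirect without changing your interactive shell, you can run it inside a sub-shell. This is a sketch only; the log file name and the messages are invented for the demo.

```shell
tmpdir=$(mktemp -d)

(
    exec 2>"$tmpdir/errors.log"   # from here on, stderr of this sub-shell goes to the log
    echo "first problem" >&2
    echo "normal output"          # stdout is not affected by the exec above
    echo "second problem" >&2
) >"$tmpdir/stdout.txt"

errors=$(cat "$tmpdir/errors.log")
normal=$(cat "$tmpdir/stdout.txt")

echo "logged errors:"
echo "$errors"
```

Because the exec happens inside the parentheses, the redirect disappears when the sub-shell exits and the parent shell's stderr is left alone.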

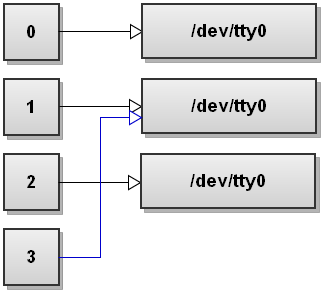

9. Open a file for reading using a custom file descriptor

$ exec 3<file

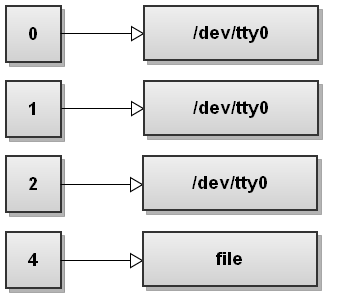

Here we use the exec command again and specify the 3<file redirect to it. What this does is opens the file for reading and assigns the opened file-descriptor to the shell's file descriptor number 3. The file descriptor table now looks like this:

Now you can read from the file descriptor 3, like this:

$ read -u 3 line

This reads a line from the file that we just opened as fd 3.

Or you can use regular shell commands such as grep and operate on file descriptor 3:

$ grep "foo" <&3

What happens here is file descriptor 3 gets duplicated to file descriptor 0 - stdin of grep. Just remember that once you read the file descriptor it's been exhausted and you need to close it and open it again to use it. (You can't rewind an fd in bash.)

After you're done using fd 3, you can close it this way:

$ exec 3>&-

Here the file descriptor 3 is duped to -, which is bash's special way to say "close this fd".
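Putting the open, read, and close steps together gives something like the sketch below. The input file and its contents are assumptions for the demo. It also shows that the descriptor remembers its position, so each read continues where the previous one stopped.

```shell
tmpdir=$(mktemp -d)
printf 'one\ntwo\nthree\n' >"$tmpdir/input.txt"   # sample file for the demo

exec 3<"$tmpdir/input.txt"   # open the file for reading as fd 3

read -r -u 3 a               # consumes "one"
read -r -u 3 b               # continues at "two"
rest=$(cat <&3)              # cat gets whatever is left on fd 3

exec 3>&-                    # close fd 3

echo "a=$a b=$b rest=$rest"
```

After cat has read to the end, fd 3 is exhausted, which is why it is closed here rather than reused.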

10. Open a file for writing using a custom file descriptor

$ exec 4>file

Here we simply tell bash to open file for writing and assign it number 4. The file descriptor table looks like this:

As you can see file descriptors don't have to be used in order, you can open any file descriptor number you like from 0 to 255.

Now we can simply write to the file descriptor 4:

$ echo "foo" >&4

And we can close the file descriptor 4:

$ exec 4>&-

It's so simple now once we learned how to work with custom file descriptors!

11. Open a file both for writing and reading

$ exec 3<>file

Here we use bash's diamond operator <>. The diamond operator opens a file descriptor for both reading and writing.

So for example, if you do this:

$ echo "foo bar" > file   # write string "foo bar" to file "file".
$ exec 5<> file           # open "file" for rw and assign it fd 5.
$ read -n 3 var <&5       # read the first 3 characters from fd 5.
$ echo $var

This will output foo as we just read the first 3 chars from the file.

Now we can write some stuff to the file:

$ echo -n + >&5           # write "+" at 4th position.
$ exec 5>&-               # close fd 5.
$ cat file

This will output foo+bar as we wrote the + char at 4th position in the file.

12. Send the output from multiple commands to a file

$ (command1; command2) >file

This one-liner uses the (commands) construct that runs the commands in a sub-shell. A sub-shell is a child process launched by the current shell.

So what happens here is the commands command1 and command2 get executed in the sub-shell, and bash redirects their output to file.
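Because the commands run in a child process, anything they change (variables, the current directory) is gone afterwards. Bash also has the { commands; } grouping, which takes a redirect the same way but runs in the current shell. The sketch below compares the two; the file names and the counter variable are made up for the demo.

```shell
tmpdir=$(mktemp -d)

# Sub-shell grouping: both commands write to the same file.
(echo "from command1"; echo "from command2") >"$tmpdir/sub.log"

# Brace grouping: same redirect, but no child process is created.
{ echo "from command1"; echo "from command2"; } >"$tmpdir/brace.log"

counter=0
(counter=1)        # change happens in the child and is lost
after_subshell=$counter
{ counter=2; }     # change happens in the current shell and is kept
after_braces=$counter

sub=$(cat "$tmpdir/sub.log")
brace=$(cat "$tmpdir/brace.log")
echo "after sub-shell: $after_subshell, after braces: $after_braces"
```

Note that the brace form needs a space after { and a semicolon (or newline) before }.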

13. Execute commands in a shell through a file

Open two shells. In shell 1 do this:

$ mkfifo fifo
$ exec < fifo

In shell 2 do this:

$ exec 3> fifo; echo 'echo test' >&3

Now take a look in shell 1. It will execute echo test. You can keep writing commands to fifo and shell 1 will keep executing them.

Here is how it works.

In shell 1 we use the mkfifo command to create a named pipe called fifo. A named pipe (also called a FIFO) is similar to a regular pipe, except that it's accessed as part of the file system. It can be opened by multiple processes for reading or writing. When processes are exchanging data via the FIFO, the kernel passes all data internally without writing it to the file system. Thus, the FIFO special file has no contents on the file system; the file system entry merely serves as a reference point so that processes can access the pipe using a name in the file system.

Next we use exec < fifo to replace current shell's stdin with fifo.

Now in shell 2 we open the named pipe for writing and assign it a custom file descriptor 3. Next we simply write echo test to the file descriptor 3, which goes to fifo.

Since shell 1's stdin is connected to this pipe it executes it! Really simple!
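If you don't want to open two terminals, the same experiment can be approximated in a single script. This is a sketch under assumptions: a background bash process plays the role of shell 1, its output is sent to a file so it can be inspected, and the paths are invented for the demo.

```shell
tmpdir=$(mktemp -d)
mkfifo "$tmpdir/fifo"

# "Shell 1": a background bash whose stdin is the FIFO.
bash <"$tmpdir/fifo" >"$tmpdir/shell1.out" &

# "Shell 2": open the FIFO for writing as fd 3 and send commands through it.
exec 3>"$tmpdir/fifo"
echo 'echo test' >&3
echo 'echo again' >&3
exec 3>&-          # closing the last writer gives the reader end-of-file, so it exits

wait               # wait for the background shell to finish
result=$(cat "$tmpdir/shell1.out")
echo "$result"
```

Opening a FIFO blocks until the other end is opened too, which is why the reader is started in the background before the writer opens fd 3.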

14. Access a website through bash

$ exec 3<>/dev/tcp/www.google.com/80
$ echo -e "GET / HTTP/1.1\n\n" >&3
$ cat <&3

Bash treats the /dev/tcp/host/port as a special file. It doesn't need to exist on your system. This special file is for opening tcp connections through bash.

In this example we first open file descriptor 3 for reading and writing and point it to /dev/tcp/www.google.com/80 special file, which is a connection to www.google.com on port 80.

Next we write GET / HTTP/1.1\n\n to file descriptor 3. Then we simply read the response back from the same file descriptor by using cat.

Similarly you can create a UDP connection through /dev/udp/host/port special file.

With /dev/tcp/host/port you can even write things like port scanners in bash!

15. Prevent overwriting the contents of a file when redirecting output

$ set -o noclobber

This turns on the noclobber option for the current shell. The noclobber option prevents you from overwriting existing files with the > operator.

If you try redirecting output to a file that exists, you'll get an error:

$ program > file
bash: file: cannot overwrite existing file

If you're 100% sure that you want to overwrite the file, use the >| redirection operator:

$ program >| file

This succeeds as it overrides the noclobber option.
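Here is a sketch that exercises both operators. The option is turned on inside sub-shells so that your current shell's settings are not changed; the file name and contents are made up for the demo.

```shell
tmpdir=$(mktemp -d)
echo "original" >"$tmpdir/file"

# With noclobber on, > refuses to overwrite and the command fails.
if ( set -o noclobber; echo "overwrite attempt" >"$tmpdir/file" ) 2>/dev/null; then
    refused=no
else
    refused=yes
fi
after_refusal=$(cat "$tmpdir/file")

# >| overrides noclobber for this one redirect.
( set -o noclobber; echo "forced" >|"$tmpdir/file" )
after_force=$(cat "$tmpdir/file")

echo "refused=$refused, then: $after_refusal, finally: $after_force"
```

In an interactive shell you can turn the option off again with set +o noclobber.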

16. Redirect standard input to a file and print it to standard output

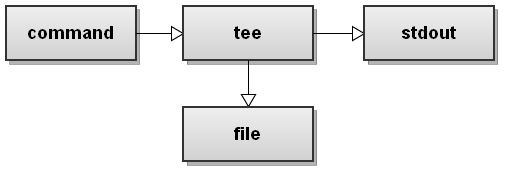

$ command | tee file

The tee command is super handy. It's not part of bash but you'll use it often. It takes an input stream and prints it both to standard output and to a file.

In this example it takes the stdout of command, puts it in file, and prints it to stdout.

Here is a graphical illustration of how it works:

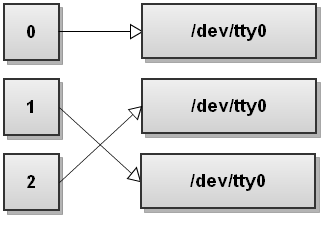
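A quick way to convince yourself that tee duplicates the stream is to compare what it prints with what it saves. A sketch with an invented file name and sample input:

```shell
tmpdir=$(mktemp -d)

# tee writes its stdin to the file and also passes it on to stdout,
# which is captured here by the command substitution.
seen=$(printf 'line1\nline2\n' | tee "$tmpdir/copy.txt")
saved=$(cat "$tmpdir/copy.txt")

echo "on stdout: $seen"
echo "in file:   $saved"
```

Both variables hold the same two lines.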

17. Send stdout of one process to stdin of another process

$ command1 | command2

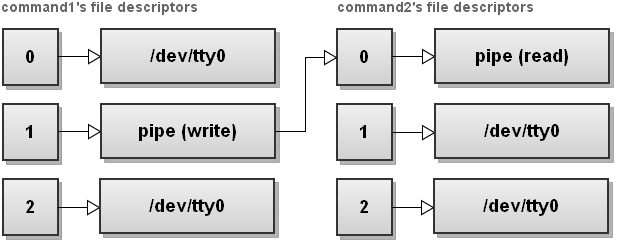

This is simple piping. I'm sure everyone is familiar with this. I'm just including it here for completeness. Just to remind you, a pipe connects stdout of command1 with the stdin of command2.

It can be illustrated with a graphic:

As you can see, everything sent to file descriptor 1 (stdout) of command1 gets redirected through a pipe to file descriptor 0 (stdin) of command2.

You can read more about pipes in man 2 pipe.

18. Send stdout and stderr of one process to stdin of another process

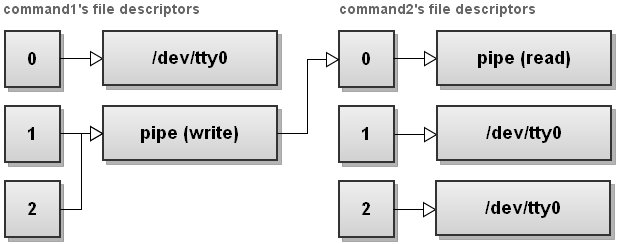

$ command1 |& command2

This works on bash versions starting 4.0. The |& redirection operator sends both stdout and stderr of command1 over a pipe to stdin of command2.

As the new features of bash 4.0 aren't widely used, the old, and more portable way to do the same is:

$ command1 2>&1 | command2

Here is an illustration that shows what happens with file descriptors:

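First a pipe is set up between command1's stdout and command2's stdin, and then command1's stderr is duplicated to stdout, which by that point already refers to the pipe. Bash connects the pipe before it processes any redirections written on the command, which is why 2>&1 sends stderr into the pipe too.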
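The effect is easy to measure by counting the lines that arrive on the other side of the pipe. This is a sketch: the `noisy` helper is made up for the demo, and the |& line needs bash 4.0 or later.

```shell
# Hypothetical helper for the demo: one line to stdout, one to stderr.
noisy() {
    echo "to stdout"
    echo "to stderr" >&2
}

# Plain pipe with stderr discarded: only the stdout line reaches grep.
count_stdout_only=$(noisy 2>/dev/null | grep -c 'to')

# Portable form: stderr is duplicated into the pipe.
count_portable=$(noisy 2>&1 | grep -c 'to')

# bash 4.0+ shorthand for the same thing.
count_bash4=$(noisy |& grep -c 'to')

echo "$count_stdout_only $count_portable $count_bash4"
```

grep -c prints the number of matching lines, so the second and third counts include the stderr line.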

19. Give file descriptors names

$ exec {filew}>output_file

Named file descriptors is a feature of bash 4.1. Named file descriptors look like {varname}. You can use them just like regular, numeric, file descriptors. Bash internally chooses a free file descriptor and assigns it a name.
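A sketch of the full life cycle of a named file descriptor, assuming bash 4.1 or later. The variable name filew comes from the one-liner; the temporary path and the message are invented for the demo.

```shell
tmpdir=$(mktemp -d)

# bash picks a free descriptor (10 or higher) and stores its number in $filew.
exec {filew}>"$tmpdir/output_file"

# Use the stored number like any numeric descriptor.
echo "written via fd $filew" >&"$filew"

# Close it through the same name.
exec {filew}>&-

content=$(cat "$tmpdir/output_file")
echo "$content"
```

This avoids hard-coding descriptor numbers that another part of a script might already be using.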

20. Order of redirections

You can put the redirections anywhere in the command you want. Check out these 3 examples, they all do the same:

$ echo hello >/tmp/example
$ echo >/tmp/example hello
$ >/tmp/example echo hello

Got to love bash!

21. Swap stdout and stderr

$ command 3>&1 1>&2 2>&3

Here we first duplicate file descriptor 3 to be a copy of stdout. Then we duplicate stdout to be a copy of stderr, and finally we duplicate stderr to be a copy of file descriptor 3, which is stdout. As a result we've swapped stdout and stderr.

Let's go through each redirection with illustrations. Before running the command, we have file descriptors pointing to the terminal:

Next bash sets up the 3>&1 redirection. This creates file descriptor 3 to be a copy of file descriptor 1:

Next bash sets up the 1>&2 redirection. This makes file descriptor 1 to be a copy of file descriptor 2:

Next bash sets up the 2>&3 redirection. This makes file descriptor 2 to be a copy of file descriptor 3:

If we want to be nice citizens we can also close file descriptor 3 as it's no longer needed:

$ command 3>&1 1>&2 2>&3 3>&-

The file descriptor table then looks like this:

As you can see, file descriptors 1 and 2 have been swapped.
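To verify the swap, capture what comes out on each stream after the three redirects. This is a sketch; the `noisy` helper and the file name are assumptions for the demo.

```shell
# Hypothetical helper for the demo: one line to stdout, one to stderr.
noisy() {
    echo "to stdout"
    echo "to stderr" >&2
}

tmpdir=$(mktemp -d)

# Outer layer: stdout is captured by $(...), stderr goes to a file.
# Inner layer: the swap, so the two lines trade places.
swapped_out=$( { noisy 3>&1 1>&2 2>&3 3>&-; } 2>"$tmpdir/swapped_err.txt" )
swapped_err=$(cat "$tmpdir/swapped_err.txt")

echo "arrived on stdout: $swapped_out"
echo "arrived on stderr: $swapped_err"
```

The line that noisy wrote to stderr is the one that gets captured, and the stdout line ends up in the stderr file.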

22. Send stdout to one process and stderr to another process

$ command > >(stdout_cmd) 2> >(stderr_cmd)

This one-liner uses process substitution. The >(...) operator runs the commands in ... with stdin connected to the read part of an anonymous named pipe. Bash replaces the operator with the filename of the anonymous pipe.

So for example, the first substitution >(stdout_cmd) might return /dev/fd/60, and the second substitution might return /dev/fd/61. Both of these files are named pipes that bash created on the fly. Both named pipes have the commands as readers. The commands wait for someone to write to the pipes so they can read the data.

The command then looks like this:

$ command > /dev/fd/60 2> /dev/fd/61

Now these are just simple redirections. Stdout gets redirected to /dev/fd/60, and stderr gets redirected to /dev/fd/61.

When the command writes to stdout, the process behind /dev/fd/60 (process stdout_cmd) reads the data. And when the command writes to stderr, the process behind /dev/fd/61 (process stderr_cmd) reads the data.

23. Find the exit codes of all piped commands

Let's say you run several commands all piped together:

$ cmd1 | cmd2 | cmd3 | cmd4

And you want to find out the exit status codes of all these commands. How do you do it? There is no easy way to get the exit codes of all commands as bash returns only the exit code of the last command.

Bash developers thought about this and they added a special PIPESTATUS array that saves the exit codes of all the commands in the pipe stream.

The elements of the PIPESTATUS array correspond to the exit codes of the commands. Here's an example:

$ echo 'pants are cool' | grep 'moo' | sed 's/o/x/' | awk '{ print $1 }'
$ echo ${PIPESTATUS[@]}
0 1 0 0

In this example grep 'moo' fails, and the second element of the PIPESTATUS array indicates failure.
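In a script, keep in mind that PIPESTATUS is overwritten by every command that follows, so it is safest to copy it right after the pipeline. The sketch below does that and then looks for the first failing stage; the names statuses and failed_index are made up for the demo.

```shell
echo 'pants are cool' | grep 'moo' | sed 's/o/x/' | awk '{ print $1 }'
statuses=("${PIPESTATUS[@]}")    # copy immediately, before running anything else

failed_index=-1
for i in "${!statuses[@]}"; do
    if [ "${statuses[$i]}" -ne 0 ] && [ "$failed_index" -lt 0 ]; then
        failed_index=$i          # zero-based position of the first failing command
    fi
done

echo "exit codes: ${statuses[*]}"
echo "first failure at position: $failed_index"
```

Position 1 is grep 'moo', which finds no match and therefore exits with status 1.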

Shoutouts

Shoutouts to bash hackers wiki for their illustrated redirection tutorial and bash version changes.

Enjoy!

Enjoy the article and let me know in the comments what you think about it! If you think that I forgot some interesting bash one-liners related to redirections, let me know in the comments below!

Source: https://catonmat.net/bash-one-liners-explained-part-three
